Machine learning is now a core part of almost every modern business, but building a great model in a notebook is only the beginning. The real challenge starts when you need to run that model reliably in production, day after day, for thousands or millions of users. This is where MLOps deployment comes in.

Machine Learning Operations ( MLOps )is an engineering discipline that aims to unify machine learning system development and machine learning system operations. It focuses on automating and streamlining the processes of deploying, monitoring, and maintaining ML models in production environments. The goal is to enhance the quality, speed, and consistency of deploying machine learning solutions.

Whether you’re deploying recommendation engines, fraud detectors, or generative AI tools, a solid MLOps foundation turns experimental models into dependable business assets. This guide walks you through the essentials—tools, strategies, and practical steps—so you can deploy with confidence.

At first glance, MLOps looks a lot like DevOps—and it should, because it builds on the same foundation of automation and collaboration. However, machine learning brings extra challenges that regular software doesn’t have.

MLOps relies on a range of tools and services designed to facilitate various aspects of the machine learning lifecycle. These include:

1. Data Versioning Tools: DVC (Data Version Control) and Delta Lake manage data changes and enable reproducibility.

2. Model Training and Experimentation: Platforms like MLflow and Kubeflow assist in tracking experiments, managing the model lifecycle, and serving models.

3. Model Deployment: TensorFlow Serving, TorchServe, and Microsoft Azure ML provide robust frameworks for deploying and managing ML models.

4. Monitoring and Operations: Tools such as Prometheus and Grafana are used for monitoring the operational aspects, whereas Evidently AI focuses on monitoring model performance.

These tools integrate with traditional CI/CD pipelines to enhance the deployment and maintenance of ML models in production environments.

Choosing between server-less compute and dedicated servers is critical in MLOps for deploying machine learning models. Server-less computing offers a way to run model predictions without managing server infrastructure. It scales automatically, is cost-efficient for sporadic inference needs, and reduces operational burdens. AWS Lambda and Google Cloud Functions are popular server-less platforms. On the other hand, dedicated servers provide more control over the computing environment and are beneficial for compute-intensive models requiring high-throughput and low-latency processing. Dedicated servers are preferred for continuous, high-load tasks due to their predictable performance.

When dealing with compute-intensive models, such as generative models, the following tips can help in setting up effective MLOps infrastructure:

1. Leverage GPU Acceleration: Utilize GPU instances for training and inference to handle high computational requirements efficiently.

2. Use Scalable Storage: Implement scalable and performant storage solutions like Amazon S3 or Google Cloud Storage to manage large datasets and model artifacts.

3. Implement Load Balancers: Use load balancers to distribute inference requests evenly across multiple instances, ensuring optimal resource utilization and response time.

4. Automation: Automate resource scaling to handle varying loads without manual intervention, ensuring that resources are optimized for cost and performance.

Continuous learning pipelines are designed to automatically retrain and update models based on new data. This is essential in dynamic environments where data patterns frequently change, leading to model drift. A continuous learning pipeline typically involves automated data collection, data preprocessing, model retraining, performance evaluation, and conditional deployment. Tools like Apache Airflow or Prefect can be used to orchestrate these pipelines, ensuring that models remain relevant and perform optimally over time.

Monitoring is crucial in MLOps to ensure that deployed models perform as expected. Continuous monitoring pipelines focus on: Performance Metrics, Model Drift Detection, and Operational Metrics. These metrics are vital for proactive maintenance and ensuring that the ML systems deliver consistent, reliable results.

MLOps is a sophisticated field that bridges the gap between machine learning and operational excellence. By utilizing appropriate tools and strategies, organizations can ensure that their machine learning models are not only accurate but also robust and scalable. As machine learning continues to evolve, MLOps will play an increasingly critical role in the deployment and management of AI-driven systems.

At AxcelerateAI, we build end-to-end MLOps pipelines so you can focus on the model, not the plumbing. Ready to move your AI from notebook to production without the headaches? Partner with a best AI agency that prioritizes MLOps from conception through deployment.

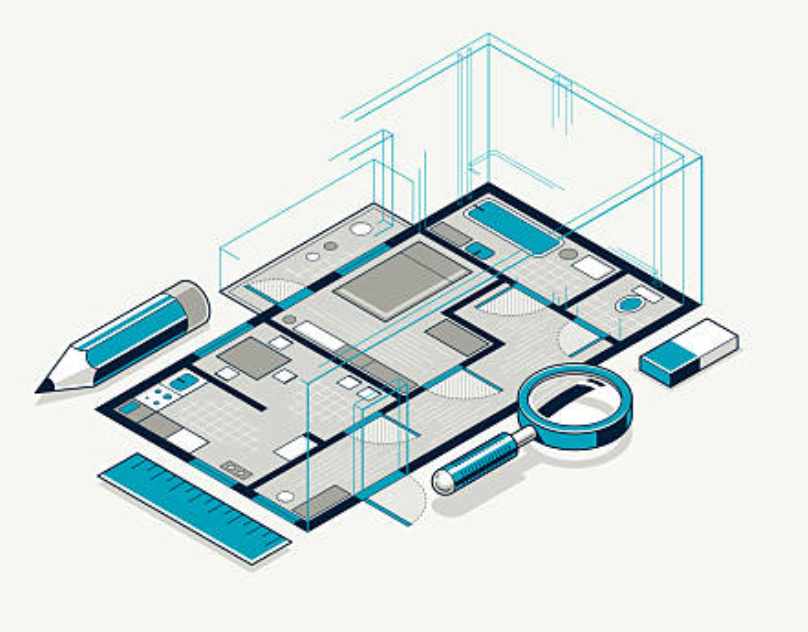

Unlock PropTech automation. Learn how our custom AI uses Computer Vision and geometric reasoning to extract data from floor plans, reducing costs.

.png)

Automate grading, curriculum mapping, and student records. See 5 top use cases where IDP and OCR transform academic operations.

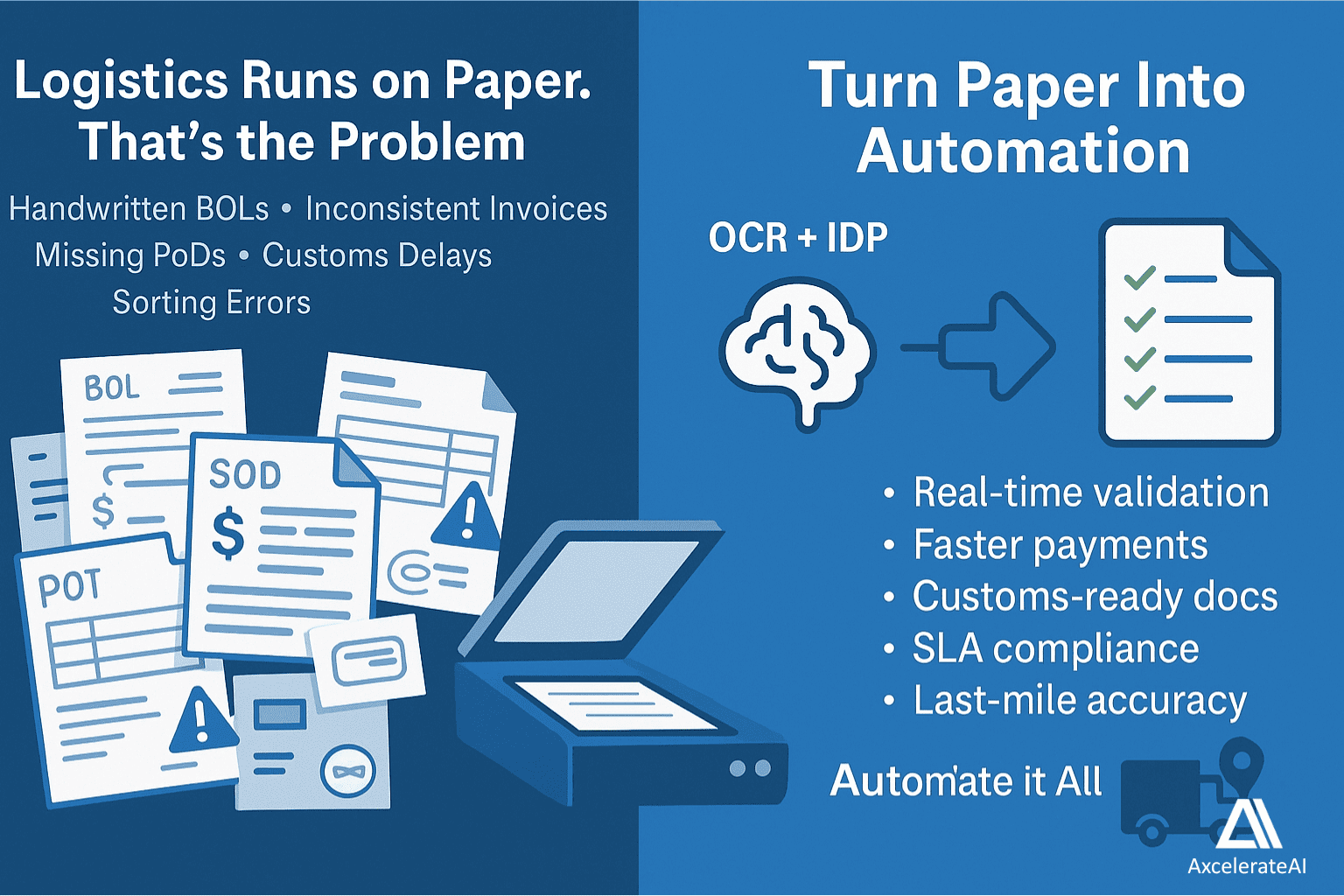

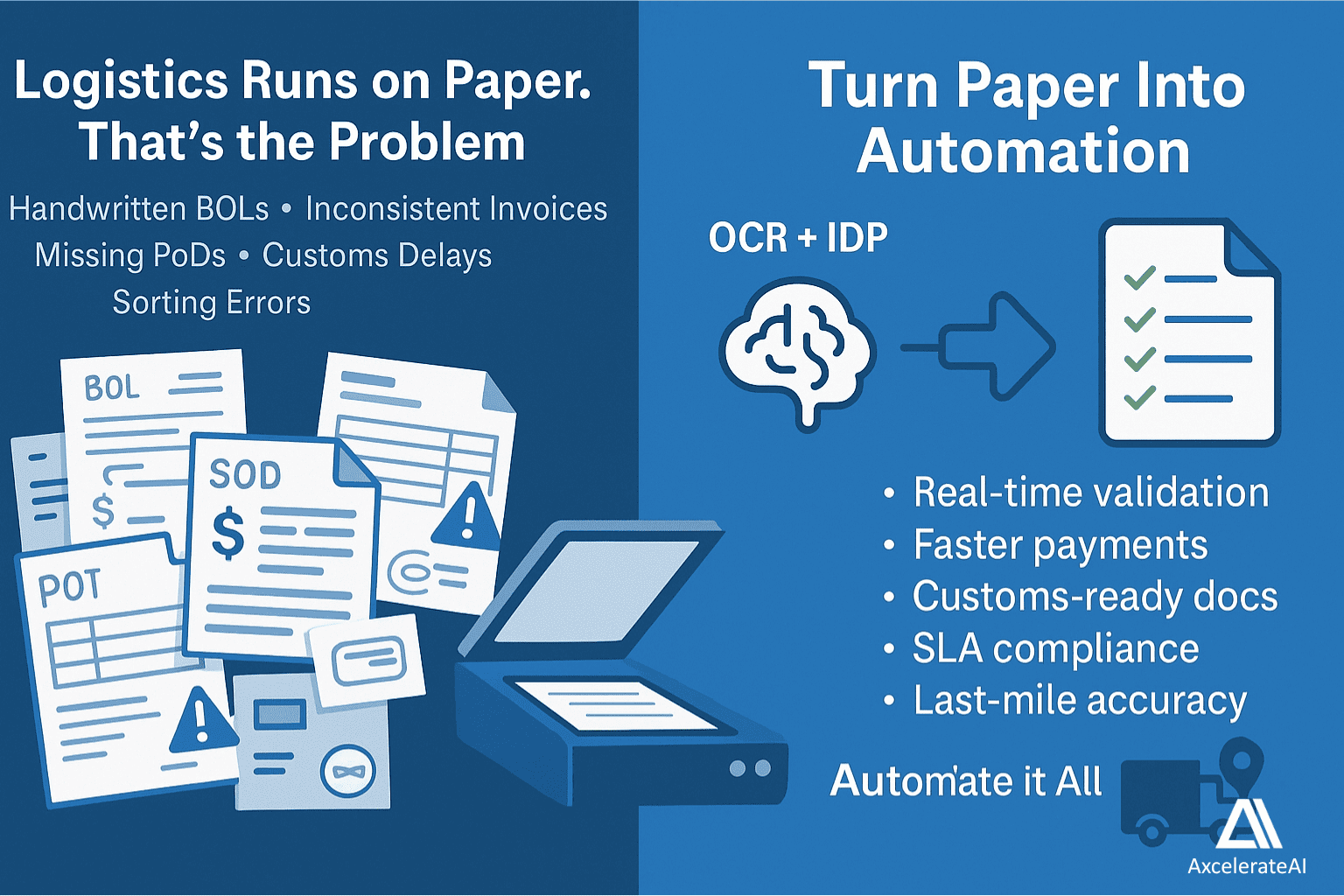

Unlock logistics efficiency with OCR and IDP: Automate inventory, supply chain tracking, and compliance. See real examples from DHL and Maersk.

Unlock PropTech automation. Learn how our custom AI uses Computer Vision and geometric reasoning to extract data from floor plans, reducing costs.

.png)

Automate grading, curriculum mapping, and student records. See 5 top use cases where IDP and OCR transform academic operations.

Unlock logistics efficiency with OCR and IDP: Automate inventory, supply chain tracking, and compliance. See real examples from DHL and Maersk.