Large language models (LLMs) have significantly transformed the way we interact with information and automate tasks. These massive foundational models, trained on trillions of tokens of publicly available data, exhibit remarkable general reasoning and language generation capabilities. However, their incredible strength is also their weakness: they are closed systems with two fundamental limitations that prevent their use in enterprise environments: the knowledge cut-off and the tendency to hallucinate.

The issue is critical when dealing with proprietary or timely information. For example, suppose that you run a financial consultancy business and have internal, private data—client contracts, private research, or recent market reports—that is not published anywhere on the internet. This means the LLMs did not have access to that data during training. This is where retrieval augmented generation (RAG) systems come in, which allow you to seamlessly connect LLMs with your own knowledge databases. By providing up-to-date, verified context, RAG instantly transforms a static LLM into a dynamic, accurate knowledge worker.

This approach combines the generative powers of LLMs with information retrieval abilities typical of search engines. By doing so, RAG enables models to access a wealth of external information during the generation process, leading to more informed and contextually accurate outputs. This method is in sharp contrast to the standard approach where LLMs generate responses based solely on their pre-trained data, which often results in misleading or fabricated answers.

The primary reason organizations adopt this technique is to overcome the two major limitations of foundational models: Knowledge Cut-Off and Hallucination. LLMs are only knowledgeable up to the date their training data was finalized, meaning they cannot answer questions about recent events or, more critically, your private business documents. When models lack the required data, they often generate confident-sounding but factually incorrect information. This critical function directly addresses the core problem of LLM hallucination by providing verifiable external context, making the output reliable and traceable to a source document. This grounding is the key differentiator for enterprise adoption.

The RAG process is divided into a Retrieval Phase and a Generation Phase, which are orchestrated through specialized tools:

LLMs have a strict limit on the length of an input prompt. If you have a large knowledge base, you cannot directly feed all of it to the model. The first step of the RAG process involves preparing and querying your data:

Next, the RAG framework combines the context (the relevant chunks retrieved) and the user's original query. This augmented prompt is fed to an LLM (such as GPT or Llama), instructing it to generate an answer based only on the provided context. This crucial step prevents the model from relying on its internal, potentially outdated, knowledge, thereby eliminating the source of misinformation.

RAG is a foundational technology that powers numerous high-ROI applications:

While the benefits are substantial, organizations must navigate several technical hurdles when setting up a robust system:

Successfully implementing a robust RAG system that works reliably at scale requires specialized expertise beyond simple API calls. It involves complex decisions regarding data chunking strategy, choice of embedding models, vector database optimization, and setting up scalable MLOps infrastructure.

Building and deploying these sophisticated RAG solutions is a key deliverable within ai chatbot development services. Companies require partners who can handle the entire stack, from custom data ingestion and processing to continuous monitoring and maintenance in a secure production environment.

The implementation of Retrieval-Augmented Generation is a decisive factor in unlocking the true power of LLMs for enterprise use cases. By solving the critical issues of knowledge cutoff and factual inaccuracy, RAG ensures that AI applications are grounded, reliable, and capable of driving substantial business value. If you're ready to move past generic LLMs and connect your private data to Generative AI, contact us to discuss your RAG implementation and stop the issue of LLM hallucination in your organization.

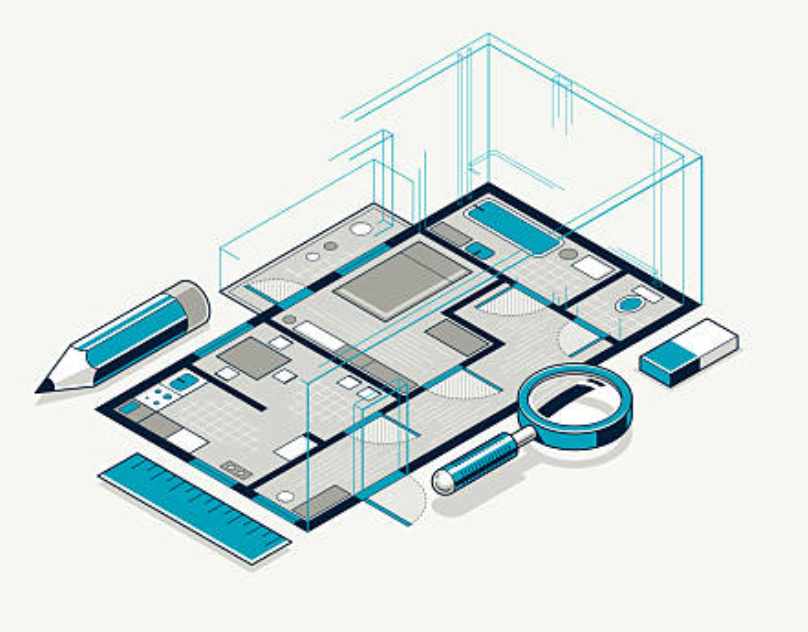

Unlock PropTech automation. Learn how our custom AI uses Computer Vision and geometric reasoning to extract data from floor plans, reducing costs.

.png)

Automate grading, curriculum mapping, and student records. See 5 top use cases where IDP and OCR transform academic operations.

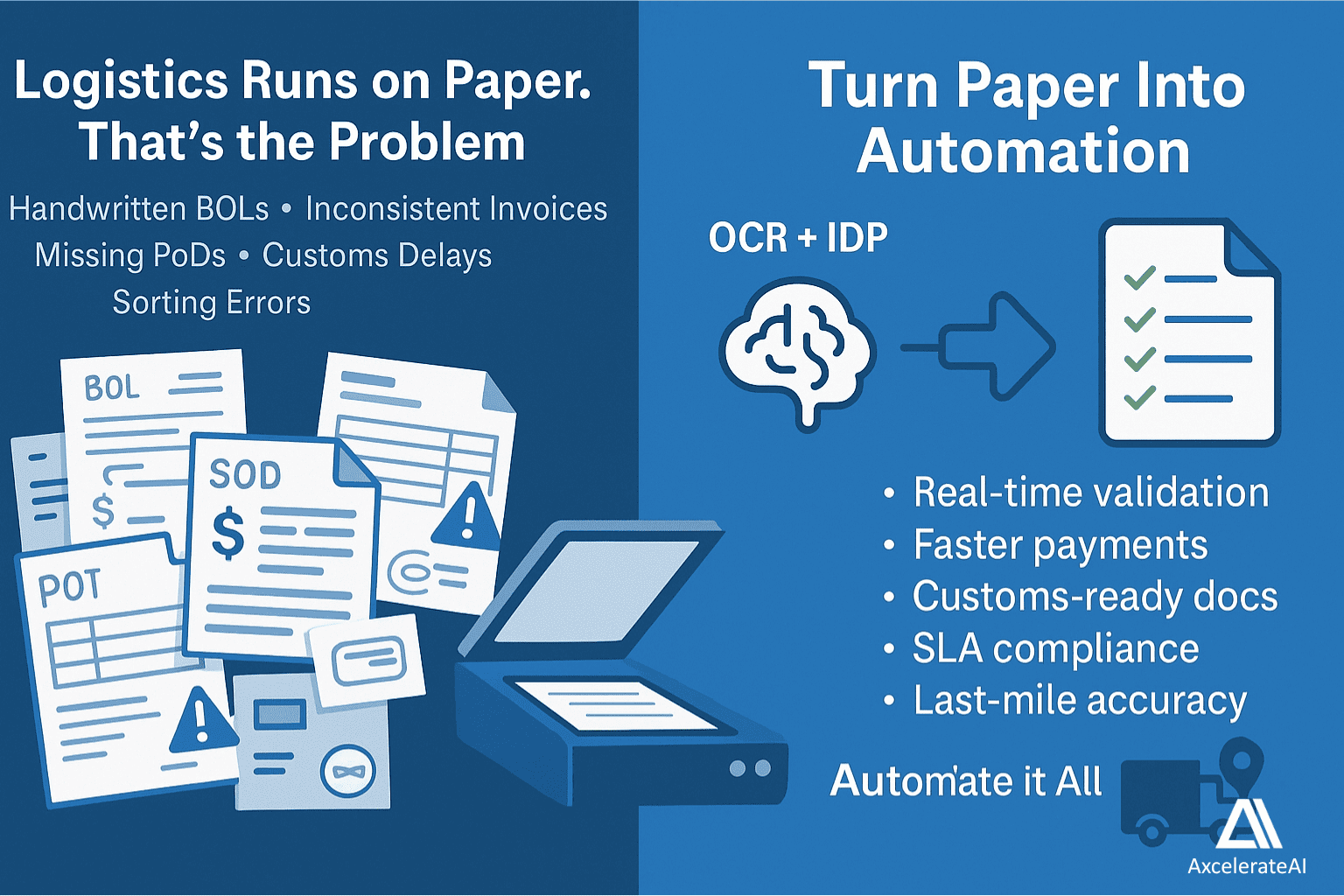

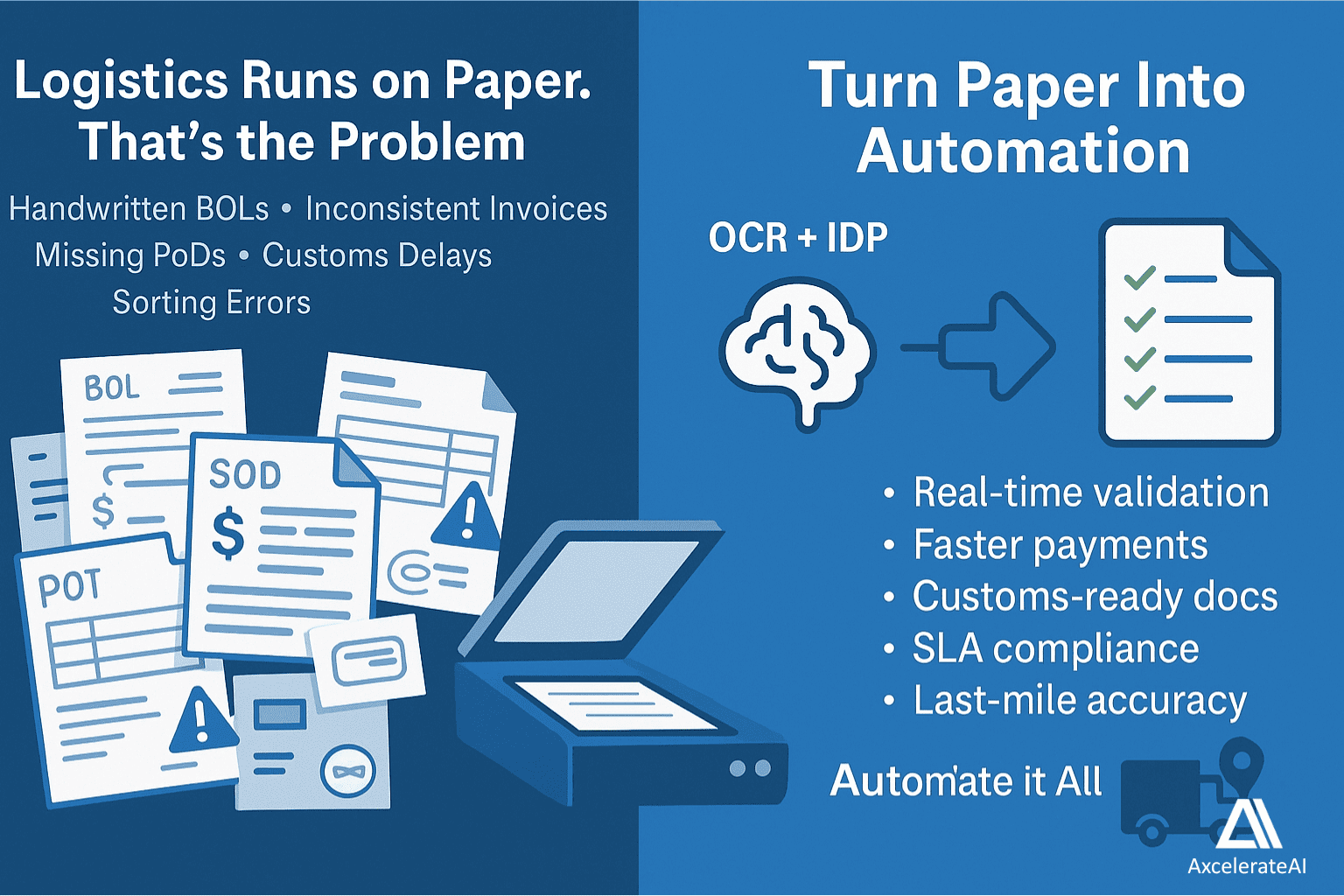

Unlock logistics efficiency with OCR and IDP: Automate inventory, supply chain tracking, and compliance. See real examples from DHL and Maersk.

Unlock PropTech automation. Learn how our custom AI uses Computer Vision and geometric reasoning to extract data from floor plans, reducing costs.

.png)

Automate grading, curriculum mapping, and student records. See 5 top use cases where IDP and OCR transform academic operations.

Unlock logistics efficiency with OCR and IDP: Automate inventory, supply chain tracking, and compliance. See real examples from DHL and Maersk.